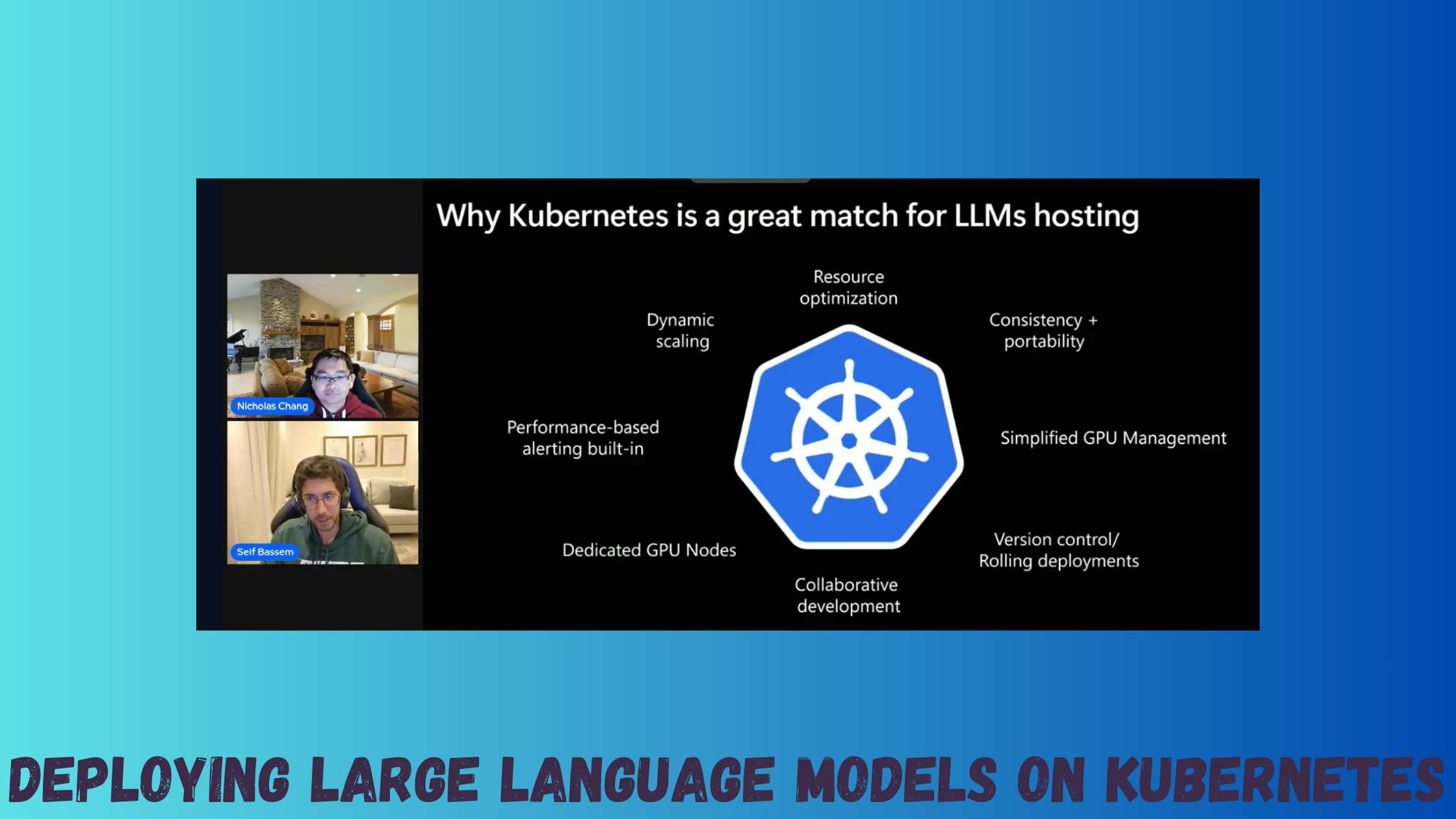

Deploying LLMs on Kubernetes

There is growing demand in enterprises to deploy AI models and agents on their own infrastructure, whether on-premises or in the cloud, and the main platform enabling this shift is Kubernetes. Organizations doing this are usually looking for:

- 🔐 Data control & sovereignty (regulated industries)
- ⚙️ Infrastructure-level control and customization (GPUs, scheduling, scaling)
- 📉 Cost predictability at scale for sustained inference workloads
- 🧩 Platform consistency, where AI is just another workload on K8s
- 🛠️ Edge deployments for latency-sensitive and air-gapped scenarios

I had a great conversation with Nicholas Chang on the Microsoft Community Insights Podcast about this topic, which included a demo of deploying AI models on AKS with the Kubernetes AI Toolchain Operator (KAITO).
